Choose the Best ResNet Model for Transfer Learning

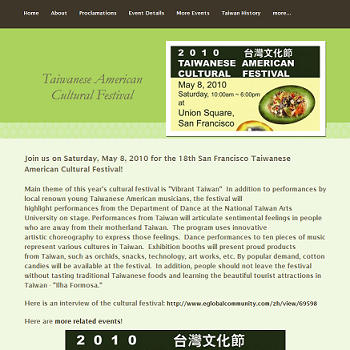

Note: I built an app that classifies objects using transfer learning, and I trained its model on the CIFAR-10 dataset myself. Here's the app:

ResNet Architecture Comparison

Experiment Setup

Now that we have established that ResNet outperforms MobileNet in this project, the next step is to compare three ResNet variants: ResNet-18, ResNet-34, and ResNet-50.

For all three models, we made sure to unfreeze only layers that actually contain trainable parameters. This ensures that TRAINABLE_LAYERS behaves consistently and meaningfully across architectures.

Correct Mapping of TRAINABLE_LAYERS

With the corrected unfreezing logic, TRAINABLE_LAYERS now maps to the following ResNet components:

– 1 → fc

– 2 → layer4 + fc

– 3 → layer3 + layer4 + fc

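Each increment unfreezes one more stage, working backward from the classifier, so progressively more of the high-level feature hierarchy is fine-tuned while the lower-level feature extractors (conv1, bn1, layer1, and layer2 at TRAINABLE_LAYERS = 3) stay frozen.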
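The mapping above can be reproduced by counting only the top-level modules that own parameters. Below is a minimal pure-Python sketch, assuming torchvision's ResNet layout of top-level children; `RESNET_CHILDREN`, `select_trainable`, and `select_naive` are hypothetical names for illustration, not the code used in this project. The naive variant shows what goes wrong if every child is counted: parameter-free modules such as avgpool use up slots without adding anything trainable.

```python
from typing import List, Tuple

# Assumed top-level children of a torchvision ResNet, in registration order,
# paired with a placeholder parameter count (only "> 0" matters here).
# relu, maxpool, and avgpool hold no parameters.
RESNET_CHILDREN: List[Tuple[str, int]] = [
    ("conv1", 1), ("bn1", 1), ("relu", 0), ("maxpool", 0),
    ("layer1", 1), ("layer2", 1), ("layer3", 1), ("layer4", 1),
    ("avgpool", 0), ("fc", 1),
]


def select_trainable(children: List[Tuple[str, int]], trainable_layers: int) -> List[str]:
    """Hypothetical helper: names of the last `trainable_layers` children that own parameters."""
    if trainable_layers <= 0:
        return []
    with_params = [name for name, n_params in children if n_params > 0]
    return with_params[-trainable_layers:]


def select_naive(children: List[Tuple[str, int]], trainable_layers: int) -> List[str]:
    """For comparison: counts every child, including parameter-free ones."""
    if trainable_layers <= 0:
        return []
    return [name for name, _ in children][-trainable_layers:]


for k in (1, 2, 3):
    print(k, select_trainable(RESNET_CHILDREN, k), "| naive:", select_naive(RESNET_CHILDREN, k))
```

With a real PyTorch model, the list could be built as `[(name, sum(p.numel() for p in m.parameters())) for name, m in model.named_children()]`; after freezing every parameter (`p.requires_grad = False`), you would set `requires_grad = True` on the parameters of each selected module via `getattr(model, name).parameters()`.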

Experimental Results

We set TRAINABLE_LAYERS = 3 and ran training for all three ResNet variants.

Below is the corresponding training log:

```
# ======================
# Config
# ======================
NUM_CLASSES = 10
TRAINING_SAMPLE_PER_CLASS = 100
VALIDATION_SAMPLE_PER_CLASS = 100
BATCH_SIZE = 256
EPOCHS = 20
LR = 1e-3
TRAINABLE_LAYERS = 3  # works correctly for ALL models now

==============================
Training model: resnet50
==============================
Epoch [01/20] Train Acc: 0.3920 | Val Acc: 0.2620
Epoch [02/20] Train Acc: 0.7860 | Val Acc: 0.4390
Epoch [03/20] Train Acc: 0.8990 | Val Acc: 0.4960
Epoch [04/20] Train Acc: 0.9520 | Val Acc: 0.5370
Epoch [05/20] Train Acc: 0.9510 | Val Acc: 0.5840
Epoch [06/20] Train Acc: 0.9590 | Val Acc: 0.6230
Epoch [07/20] Train Acc: 0.9650 | Val Acc: 0.6840
Epoch [08/20] Train Acc: 0.9790 | Val Acc: 0.6830
Epoch [09/20] Train Acc: 0.9770 | Val Acc: 0.6800
Epoch [10/20] Train Acc: 0.9690 | Val Acc: 0.6440
Epoch [11/20] Train Acc: 0.9740 | Val Acc: 0.6520
Epoch [12/20] Train Acc: 0.9630 | Val Acc: 0.7120
Epoch [13/20] Train Acc: 0.9690 | Val Acc: 0.5790
Epoch [14/20] Train Acc: 0.9820 | Val Acc: 0.6220
Epoch [15/20] Train Acc: 0.9800 | Val Acc: 0.5950
Epoch [16/20] Train Acc: 0.9840 | Val Acc: 0.6450
Epoch [17/20] Train Acc: 0.9800 | Val Acc: 0.6490
Epoch [18/20] Train Acc: 0.9760 | Val Acc: 0.6750
Epoch [19/20] Train Acc: 0.9870 | Val Acc: 0.7250
Epoch [20/20] Train Acc: 0.9860 | Val Acc: 0.7320

==============================
Training model: resnet34
==============================
Epoch [01/20] Train Acc: 0.4920 | Val Acc: 0.2230
Epoch [02/20] Train Acc: 0.8600 | Val Acc: 0.5890
Epoch [03/20] Train Acc: 0.9270 | Val Acc: 0.5990
Epoch [04/20] Train Acc: 0.9520 | Val Acc: 0.6350
Epoch [05/20] Train Acc: 0.9650 | Val Acc: 0.5120
Epoch [06/20] Train Acc: 0.9750 | Val Acc: 0.5900
Epoch [07/20] Train Acc: 0.9770 | Val Acc: 0.6890
Epoch [08/20] Train Acc: 0.9810 | Val Acc: 0.7050
Epoch [09/20] Train Acc: 0.9850 | Val Acc: 0.7530
Epoch [10/20] Train Acc: 0.9880 | Val Acc: 0.7560
Epoch [11/20] Train Acc: 0.9920 | Val Acc: 0.7500
Epoch [12/20] Train Acc: 0.9870 | Val Acc: 0.7350
Epoch [13/20] Train Acc: 0.9900 | Val Acc: 0.7450
Epoch [14/20] Train Acc: 0.9910 | Val Acc: 0.7520
Epoch [15/20] Train Acc: 0.9950 | Val Acc: 0.7320
Epoch [16/20] Train Acc: 0.9940 | Val Acc: 0.7740
Epoch [17/20] Train Acc: 0.9940 | Val Acc: 0.7610
Epoch [18/20] Train Acc: 0.9960 | Val Acc: 0.7510
Epoch [19/20] Train Acc: 0.9950 | Val Acc: 0.7660
Epoch [20/20] Train Acc: 0.9970 | Val Acc: 0.7420

==============================
Training model: resnet18
==============================
Epoch [01/20] Train Acc: 0.4980 | Val Acc: 0.3790
Epoch [02/20] Train Acc: 0.8540 | Val Acc: 0.4420
Epoch [03/20] Train Acc: 0.9200 | Val Acc: 0.6820
Epoch [04/20] Train Acc: 0.9690 | Val Acc: 0.7240
Epoch [05/20] Train Acc: 0.9940 | Val Acc: 0.7160
Epoch [06/20] Train Acc: 0.9890 | Val Acc: 0.7620
Epoch [07/20] Train Acc: 0.9970 | Val Acc: 0.7530
Epoch [08/20] Train Acc: 0.9950 | Val Acc: 0.7650
Epoch [09/20] Train Acc: 0.9940 | Val Acc: 0.7870
Epoch [10/20] Train Acc: 0.9990 | Val Acc: 0.7800
Epoch [11/20] Train Acc: 0.9980 | Val Acc: 0.7820
Epoch [12/20] Train Acc: 1.0000 | Val Acc: 0.7900
Epoch [13/20] Train Acc: 0.9980 | Val Acc: 0.8040
Epoch [14/20] Train Acc: 0.9990 | Val Acc: 0.7970
Epoch [15/20] Train Acc: 1.0000 | Val Acc: 0.7980
Epoch [16/20] Train Acc: 1.0000 | Val Acc: 0.7820
Epoch [17/20] Train Acc: 1.0000 | Val Acc: 0.7690
Epoch [18/20] Train Acc: 1.0000 | Val Acc: 0.7830
Epoch [19/20] Train Acc: 1.0000 | Val Acc: 0.8010
Epoch [20/20] Train Acc: 1.0000 | Val Acc: 0.8140
```

The best validation accuracies over the 20 epochs were as follows:

– ResNet-18: ~81% (epoch 20)

– ResNet-34: ~77% (epoch 16; 74.2% at the final epoch)

– ResNet-50: ~73% (epoch 20)
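Because the best and final validation accuracies can differ (ResNet-34 peaks at epoch 16 and then drops), it helps to pull both out of the log rather than reading them by eye. Here is a minimal sketch, assuming the log format shown above; `summarize` is a hypothetical helper, not part of the original project. A single regex handles both model headers and epoch lines, so it also works on a log that has been flattened onto one line.

```python
import re
from typing import Dict, List, Tuple

# Matches either a model header or an epoch line from the training log.
ENTRY_RE = re.compile(
    r"Training model: (?P<model>\w+)"
    r"|Epoch \[(?P<epoch>\d+)/\d+\] Train Acc: [\d.]+\s*\|\s*Val Acc: (?P<val>[\d.]+)"
)


def summarize(log: str) -> Dict[str, dict]:
    """Return the best val acc, its epoch, and the final val acc for each model."""
    history: Dict[str, List[Tuple[int, float]]] = {}
    current = None
    for m in ENTRY_RE.finditer(log):
        if m.group("model"):
            current = m.group("model")
            history[current] = []
        elif current is not None:
            history[current].append((int(m.group("epoch")), float(m.group("val"))))

    summary = {}
    for name, records in history.items():
        if not records:
            continue  # header without any epoch lines
        best_epoch, best_val = max(records, key=lambda r: r[1])
        summary[name] = {"best": best_val, "best_epoch": best_epoch, "final": records[-1][1]}
    return summary


sample = """Training model: resnet34
Epoch [15/20] Train Acc: 0.9950 | Val Acc: 0.7320
Epoch [16/20] Train Acc: 0.9940 | Val Acc: 0.7740
Epoch [20/20] Train Acc: 0.9970 | Val Acc: 0.7420"""
print(summarize(sample))  # {'resnet34': {'best': 0.774, 'best_epoch': 16, 'final': 0.742}}
```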

Why ResNet-18 Performs Best

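At first glance, it may seem counterintuitive that the smaller ResNet-18 outperforms its deeper counterparts. However, this behavior is not surprising given the constraints of our dataset and training setup.

First, ResNet-34 and ResNet-50 contain significantly more parameters (about 21.8M and 25.6M, versus 11.7M for ResNet-18, in torchvision's standard versions). This increases their representational capacity but also raises the risk of overfitting when training data is limited, and our setup uses only 100 training images per class, or 1,000 in total. The logs are consistent with this: at the final epoch, the gap between training and validation accuracy is about 25 points for ResNet-34 and ResNet-50, but about 19 points for ResNet-18.

Second, deeper networks require more data to fully leverage their hierarchical feature depth. Without sufficient samples, higher-level layers may learn spurious correlations rather than generalizable patterns.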
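The train/validation gap used above as an overfitting signal is simple arithmetic on the final-epoch numbers. A minimal sketch; the values are copied from epoch 20 of the training log, and `generalization_gap` is a hypothetical helper. Note that a single epoch is only a snapshot, so this is a rough indicator rather than a rigorous measure.

```python
# Final-epoch (epoch 20) accuracies copied from the training log above.
FINAL_EPOCH = {
    "resnet18": {"train": 1.0000, "val": 0.8140},
    "resnet34": {"train": 0.9970, "val": 0.7420},
    "resnet50": {"train": 0.9860, "val": 0.7320},
}


def generalization_gap(train_acc: float, val_acc: float) -> float:
    """Train minus validation accuracy; a larger gap suggests more overfitting."""
    return round(train_acc - val_acc, 4)


gaps = {name: generalization_gap(a["train"], a["val"]) for name, a in FINAL_EPOCH.items()}

# Print models from smallest to largest gap.
for name, gap in sorted(gaps.items(), key=lambda kv: kv[1]):
    print(f"{name}: gap = {gap:.1%}")
```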

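Third, optimization becomes more challenging as model depth increases. Even with residual connections, deeper models are more sensitive to learning rate choices, regularization strength, and batch statistics, especially during fine-tuning. The logs hint at this: ResNet-50's validation accuracy falls from 71.2% to 57.9% between epochs 12 and 13, and ResNet-34's from 63.5% to 51.2% between epochs 4 and 5, whereas ResNet-18's never drops by more than about 1.6 points from one epoch to the next.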

Finally, the task itself may not require the additional semantic complexity offered by deeper architectures: CIFAR-10 has only ten broad classes and low-resolution 32×32 images. In such cases, a lighter model like ResNet-18 strikes a better balance between capacity and generalization.

Conclusion and Next Steps

Based on these findings, we select ResNet-18 as the backbone for further optimization.

In the next phase, we will focus on improving its performance through more advanced fine-tuning strategies, data augmentation, and hyperparameter tuning. Stay tuned.
