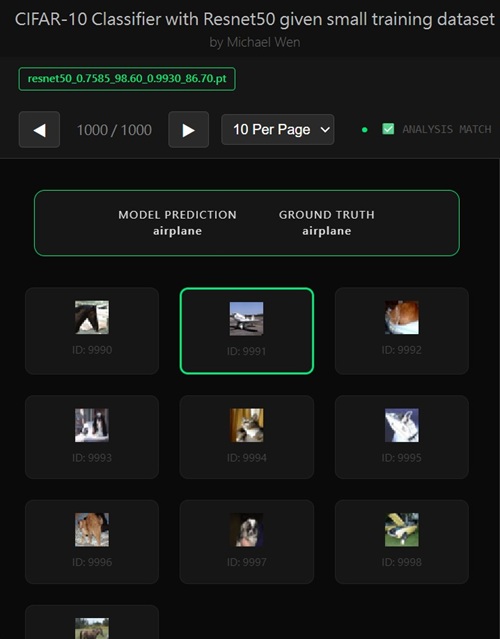

Data Augmentation Experiment

I wrote an app that classifies objects using transfer learning, and I trained the model on the CIFAR-10 dataset myself.

Effect of Data Augmentation on Validation Accuracy

Experiment Setup

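We ran a series of experiments to evaluate the effect of data augmentation on the validation performance of a fine-tuned ResNet-18. Besides a no-augmentation baseline, two augmentation pipelines were tested: a standard augmentation and a more complex augmentation with stronger transformations.

All runs use the configuration printed in the logs: 10 classes with 100 training and 100 validation images per class, batch size 256, 20 epochs, learning rate 0.001, 2 trainable layers, and early-stopping patience 10. N_AUG controls how many randomly augmented copies of each training image are used; the training accuracies in the logs (e.g. 96.27% at N_AUG = 3) are consistent with N_AUG × 1,000 training images per epoch.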

Augmentation Pipelines

1. Standard Augmentation:

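```python
from torchvision import transforms

train_transform = transforms.Compose([
    transforms.Resize(224),                 # upsample the 32x32 CIFAR-10 images to 224x224
    transforms.RandomHorizontalFlip(),      # mirror left-right with p=0.5
    transforms.RandomCrop(224, padding=8),  # zero-pad 8 px per side, then take a random 224x224 crop
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406],   # ImageNet statistics
                         std=[0.229, 0.224, 0.225])
])
```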

2. Complex Augmentation:

```python
from torchvision import transforms

train_transform = transforms.Compose([
    transforms.Resize(224),
    transforms.RandomResizedCrop(224, scale=(0.7, 1.0)),  # crop 70-100% of the area, resize back to 224x224
    transforms.RandomHorizontalFlip(),
    transforms.ColorJitter(      # random photometric changes
        brightness=0.2,
        contrast=0.2,
        saturation=0.2,
        hue=0.05
    ),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406],   # ImageNet statistics
                         std=[0.229, 0.224, 0.225])
])
```
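To make the geometric part of the standard pipeline concrete, here is a NumPy sketch of what `RandomHorizontalFlip()` followed by `RandomCrop(224, padding=8)` does to an H×W×C image array. It is an illustrative re-implementation, not torchvision's code, and it assumes zero (constant) padding, which is `RandomCrop`'s default; the function names are hypothetical.

```python
import numpy as np

def random_hflip(img, rng, p=0.5):
    """Mirror an (H, W, C) image left-right with probability p."""
    return img[:, ::-1, :] if rng.random() < p else img

def random_crop_with_padding(img, size, padding, rng):
    """Zero-pad both spatial borders by `padding` pixels, then take a random size x size crop."""
    padded = np.pad(img, ((padding, padding), (padding, padding), (0, 0)), mode="constant")
    h, w = padded.shape[:2]
    top = rng.integers(0, h - size + 1)    # upper bound is exclusive, so top is in [0, h - size]
    left = rng.integers(0, w - size + 1)
    return padded[top:top + size, left:left + size, :]

rng = np.random.default_rng(0)
img = rng.random((224, 224, 3))            # stand-in for one resized CIFAR-10 image
out = random_crop_with_padding(random_hflip(img, rng), size=224, padding=8, rng=rng)
print(out.shape)  # (224, 224, 3)
```

Because the image was upsampled from 32×32 by a factor of 7, an 8-pixel shift at 224×224 moves the content by just over one original CIFAR-10 pixel, so this pipeline perturbs the input only mildly.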

Validation Results

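| Augmentation Type | N_AUG | Best Validation Accuracy |
|---|---|---|
| No Augmentation | - | 78.90% |
| Standard Augmentation | 1 | 80.60% |
| Standard Augmentation | 3 | 82.40% |
| Standard Augmentation | 4 | 82.60% |
| Standard Augmentation | 5 | 81.80% |
| Complex Augmentation | 5 | 79.90% |
| Stronger Complex Augmentation (see raw log) | 3 | 79.80% |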
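One plausible way to implement the N_AUG expansion (an assumption; the app's own code is not shown) is a map-style dataset that repeats the base training set N_AUG times and applies the random `train_transform` on every access, so each repeat of an image receives a different augmentation. A minimal, framework-free sketch; the class name `RepeatedAugmentDataset` is hypothetical:

```python
import random

class RepeatedAugmentDataset:
    """Hypothetical wrapper exposing n_aug * len(base) samples.

    Index i maps to base sample i % len(base). The (random) transform is
    applied on every __getitem__ call, so repeats of the same image
    generally come out different.
    """

    def __init__(self, base, n_aug, transform=None):
        if n_aug < 1:
            raise ValueError("n_aug must be >= 1")
        self.base = base            # sequence of (image, label) pairs
        self.n_aug = n_aug
        self.transform = transform  # e.g. a torchvision Compose; any callable works

    def __len__(self):
        return self.n_aug * len(self.base)

    def __getitem__(self, idx):
        if not 0 <= idx < len(self):
            raise IndexError(idx)
        image, label = self.base[idx % len(self.base)]
        if self.transform is not None:
            image = self.transform(image)
        return image, label

# Toy stand-in for the 1,000-image training subset (10 classes x 100 images).
base = [(i, i // 100) for i in range(1000)]
ds = RepeatedAugmentDataset(base, n_aug=3, transform=lambda x: x + random.random())
print(len(ds))  # 3000
```

Because PyTorch's `DataLoader` accepts any map-style object that implements `__len__` and `__getitem__`, a wrapper like this could be passed to it directly, with `shuffle=True` mixing the repeats across batches.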

Analysis and Observations

- Introducing even a simple augmentation pipeline improved validation accuracy from 78.90% to over 82% at the best N_AUG (82.40% at N_AUG = 3, 82.60% at N_AUG = 4), highlighting the importance of data diversity in transfer learning.
- Increasing N_AUG beyond 4 did not improve performance further (81.80% at N_AUG = 5), suggesting that additional augmented copies contribute mostly redundant or noisy samples.
- Surprisingly, the more complex augmentation pipeline reached only 79.90% at N_AUG = 5, below standard augmentation at the same N_AUG (81.80%), and its validation accuracy drifted down in later epochs; the even stronger variant in the raw log reached 79.80%. This suggests that overly aggressive transformations can distort input features beyond the range the pretrained model expects.
- These results are consistent with a key principle: augmentation should increase useful variability without deviating from the feature distribution that the pretrained model has learned to interpret.
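Each accuracy above comes from a single run on 1,000 validation images, so close numbers such as 82.40% and 82.60% should be compared with sampling noise in mind. A rough gauge, assuming each validation prediction is an independent Bernoulli trial, is the binomial standard error sqrt(p(1 - p)/n):

```python
import math

def accuracy_standard_error(acc, n):
    """Binomial standard error of an accuracy `acc` (a fraction in [0, 1]) measured on n samples."""
    return math.sqrt(acc * (1.0 - acc) / n)

N_VAL = 10 * 100  # NUM_CLASSES * VALIDATION_SAMPLE_PER_CLASS from the run config

for name, acc in [("No augmentation", 0.789), ("Standard, N_AUG=4", 0.826)]:
    se = accuracy_standard_error(acc, N_VAL)
    print(f"{name}: {acc:.1%} +/- {se:.1%}")
# No augmentation: 78.9% +/- 1.3%
# Standard, N_AUG=4: 82.6% +/- 1.2%
```

By this yardstick, the differences of at most 0.8 points among N_AUG = 3, 4 and 5 are within one standard error, while the 3.7-point gain over no augmentation is roughly three. Since all runs share the same validation images, this is only a rough guide.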

Conclusion

- Moderate augmentation with a carefully tuned N_AUG (3 or 4 here) gave the best results for fine-tuning ResNet-18 on this CIFAR-10 subset.
- Excessive or complex augmentation may harm performance by over-perturbing the input features.
- Thoughtful augmentation is a simple yet effective lever for improving transfer learning outcomes: in this experiment it raised the best validation accuracy by 3.7 percentage points (78.90% to 82.60%).

Raw Log for Your Reference

With no data augmentation, train_transform looks like this:

```python
train_transform = transforms.Compose([
    transforms.Resize(224),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406],
                         std=[0.229, 0.224, 0.225])
])
```

Here's the corresponding training log:

```text
==============================
Training model: resnet18
==============================
Epoch [01/20] Train Loss: 1.3370 | Val Loss: 1.9445 | Train Acc: 55.20% | Val Acc: 54.10%
Epoch [02/20] Train Loss: 0.3165 | Val Loss: 2.0112 | Train Acc: 90.50% | Val Acc: 58.10%
Epoch [03/20] Train Loss: 0.1490 | Val Loss: 1.3208 | Train Acc: 96.30% | Val Acc: 68.60%
Epoch [04/20] Train Loss: 0.0754 | Val Loss: 1.0769 | Train Acc: 98.50% | Val Acc: 71.80%
Epoch [05/20] Train Loss: 0.0518 | Val Loss: 1.0204 | Train Acc: 98.90% | Val Acc: 71.90%
Epoch [06/20] Train Loss: 0.0154 | Val Loss: 0.9471 | Train Acc: 99.90% | Val Acc: 73.90%
Epoch [07/20] Train Loss: 0.0095 | Val Loss: 0.9050 | Train Acc: 100.00% | Val Acc: 75.10%
Epoch [08/20] Train Loss: 0.0071 | Val Loss: 0.8919 | Train Acc: 100.00% | Val Acc: 75.50%
Epoch [09/20] Train Loss: 0.0036 | Val Loss: 0.8561 | Train Acc: 100.00% | Val Acc: 75.80%
Epoch [10/20] Train Loss: 0.0023 | Val Loss: 0.8120 | Train Acc: 100.00% | Val Acc: 76.50%
Epoch [11/20] Train Loss: 0.0028 | Val Loss: 0.7939 | Train Acc: 100.00% | Val Acc: 76.90%
Epoch [12/20] Train Loss: 0.0016 | Val Loss: 0.7637 | Train Acc: 100.00% | Val Acc: 77.50%
Epoch [13/20] Train Loss: 0.0013 | Val Loss: 0.7415 | Train Acc: 100.00% | Val Acc: 78.30%
Epoch [14/20] Train Loss: 0.0012 | Val Loss: 0.7292 | Train Acc: 100.00% | Val Acc: 78.00%
Epoch [15/20] Train Loss: 0.0010 | Val Loss: 0.7215 | Train Acc: 100.00% | Val Acc: 78.50%
Epoch [16/20] Train Loss: 0.0009 | Val Loss: 0.7136 | Train Acc: 100.00% | Val Acc: 78.70%
Epoch [17/20] Train Loss: 0.0007 | Val Loss: 0.7087 | Train Acc: 100.00% | Val Acc: 78.90%
Epoch [18/20] Train Loss: 0.0006 | Val Loss: 0.7132 | Train Acc: 100.00% | Val Acc: 78.60%
Epoch [19/20] Train Loss: 0.0005 | Val Loss: 0.7098 | Train Acc: 100.00% | Val Acc: 78.70%
Epoch [20/20] Train Loss: 0.0006 | Val Loss: 0.7075 | Train Acc: 100.00% | Val Acc: 78.30%
Best results for resnet18:
Train Loss: 0.0007 | Val Loss: 0.7087 | Train Acc: 100.00% | Val Acc: 78.90%
Training Time: 76.41 seconds
```

From here on, we use data augmentation with standard image augmentation techniques. Here's the transform code:

```python
train_transform = transforms.Compose([
    transforms.Resize(224),
    transforms.RandomHorizontalFlip(),
    transforms.RandomCrop(224, padding=8),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406],
                         std=[0.229, 0.224, 0.225])
])
```

Here's the training log for different values of N_AUG.

```text
========== CONFIG ==========
NUM_CLASSES = 10
TRAINING_SAMPLE_PER_CLASS = 100
VALIDATION_SAMPLE_PER_CLASS = 100
BATCH_SIZE = 256
EPOCHS = 20
LR = 0.001
TRAINABLE_LAYERS = 2
EARLY_STOP_PATIENCE = 10
N_AUG = 1
DEVICE = cuda
============================
==============================
Training model: resnet18
==============================
Epoch [01/20] Train Loss: 1.3549 | Val Loss: 1.5754 | Train Acc: 54.10% | Val Acc: 62.40%
Epoch [02/20] Train Loss: 0.4315 | Val Loss: 2.0113 | Train Acc: 86.00% | Val Acc: 61.90%
Epoch [03/20] Train Loss: 0.3334 | Val Loss: 1.7052 | Train Acc: 90.00% | Val Acc: 66.10%
Epoch [04/20] Train Loss: 0.2071 | Val Loss: 1.3286 | Train Acc: 93.40% | Val Acc: 69.70%
Epoch [05/20] Train Loss: 0.1246 | Val Loss: 1.1073 | Train Acc: 96.50% | Val Acc: 74.50%
Epoch [06/20] Train Loss: 0.0770 | Val Loss: 1.1168 | Train Acc: 97.80% | Val Acc: 73.80%
Epoch [07/20] Train Loss: 0.0704 | Val Loss: 1.0630 | Train Acc: 97.70% | Val Acc: 75.10%
Epoch [08/20] Train Loss: 0.0363 | Val Loss: 0.9845 | Train Acc: 99.30% | Val Acc: 76.10%
Epoch [09/20] Train Loss: 0.0340 | Val Loss: 0.8410 | Train Acc: 99.30% | Val Acc: 77.90%
Epoch [10/20] Train Loss: 0.0251 | Val Loss: 0.8184 | Train Acc: 99.80% | Val Acc: 78.70%
Epoch [11/20] Train Loss: 0.0250 | Val Loss: 0.8143 | Train Acc: 99.50% | Val Acc: 78.40%
Epoch [12/20] Train Loss: 0.0146 | Val Loss: 0.8361 | Train Acc: 99.80% | Val Acc: 78.10%
Epoch [13/20] Train Loss: 0.0115 | Val Loss: 0.7682 | Train Acc: 100.00% | Val Acc: 79.40%
Epoch [14/20] Train Loss: 0.0064 | Val Loss: 0.7347 | Train Acc: 100.00% | Val Acc: 80.00%
Epoch [15/20] Train Loss: 0.0059 | Val Loss: 0.7145 | Train Acc: 100.00% | Val Acc: 79.10%
Epoch [16/20] Train Loss: 0.0088 | Val Loss: 0.7160 | Train Acc: 99.80% | Val Acc: 80.50%
Epoch [17/20] Train Loss: 0.0044 | Val Loss: 0.7340 | Train Acc: 100.00% | Val Acc: 80.60%
Epoch [18/20] Train Loss: 0.0035 | Val Loss: 0.7265 | Train Acc: 100.00% | Val Acc: 80.10%
Epoch [19/20] Train Loss: 0.0040 | Val Loss: 0.7524 | Train Acc: 99.90% | Val Acc: 79.50%
Epoch [20/20] Train Loss: 0.0026 | Val Loss: 0.7415 | Train Acc: 100.00% | Val Acc: 79.40%
Best results for resnet18:
Train Loss: 0.0044 | Val Loss: 0.7340 | Train Acc: 100.00% | Val Acc: 80.60%
Training Time: 78.92 seconds
```

```text
========== CONFIG ==========
NUM_CLASSES = 10
TRAINING_SAMPLE_PER_CLASS = 100
VALIDATION_SAMPLE_PER_CLASS = 100
BATCH_SIZE = 256
EPOCHS = 20
LR = 0.001
TRAINABLE_LAYERS = 2
EARLY_STOP_PATIENCE = 10
N_AUG = 3
DEVICE = cuda
============================
==============================
Training model: resnet18
==============================
Epoch [01/20] Train Loss: 0.6881 | Val Loss: 1.4389 | Train Acc: 77.50% | Val Acc: 67.70%
Epoch [02/20] Train Loss: 0.1263 | Val Loss: 0.9544 | Train Acc: 96.27% | Val Acc: 77.40%
Epoch [03/20] Train Loss: 0.0448 | Val Loss: 0.8698 | Train Acc: 99.03% | Val Acc: 77.70%
Epoch [04/20] Train Loss: 0.0215 | Val Loss: 0.7644 | Train Acc: 99.40% | Val Acc: 78.60%
Epoch [05/20] Train Loss: 0.0121 | Val Loss: 0.8842 | Train Acc: 99.73% | Val Acc: 78.20%
Epoch [06/20] Train Loss: 0.0132 | Val Loss: 0.9081 | Train Acc: 99.63% | Val Acc: 78.30%
Epoch [07/20] Train Loss: 0.0066 | Val Loss: 0.8199 | Train Acc: 99.90% | Val Acc: 79.00%
Epoch [08/20] Train Loss: 0.0035 | Val Loss: 0.8092 | Train Acc: 99.97% | Val Acc: 78.20%
Epoch [09/20] Train Loss: 0.0032 | Val Loss: 0.7783 | Train Acc: 99.97% | Val Acc: 80.10%
Epoch [10/20] Train Loss: 0.0013 | Val Loss: 0.7581 | Train Acc: 100.00% | Val Acc: 80.50%
Epoch [11/20] Train Loss: 0.0010 | Val Loss: 0.7460 | Train Acc: 100.00% | Val Acc: 80.50%
Epoch [12/20] Train Loss: 0.0006 | Val Loss: 0.7176 | Train Acc: 100.00% | Val Acc: 81.30%
Epoch [13/20] Train Loss: 0.0008 | Val Loss: 0.7047 | Train Acc: 100.00% | Val Acc: 81.50%
Epoch [14/20] Train Loss: 0.0005 | Val Loss: 0.7091 | Train Acc: 100.00% | Val Acc: 81.70%
Epoch [15/20] Train Loss: 0.0005 | Val Loss: 0.7083 | Train Acc: 100.00% | Val Acc: 81.20%
Epoch [16/20] Train Loss: 0.0006 | Val Loss: 0.7202 | Train Acc: 100.00% | Val Acc: 81.90%
Epoch [17/20] Train Loss: 0.0004 | Val Loss: 0.7152 | Train Acc: 100.00% | Val Acc: 81.90%
Epoch [18/20] Train Loss: 0.0004 | Val Loss: 0.7152 | Train Acc: 100.00% | Val Acc: 82.20%
Epoch [19/20] Train Loss: 0.0004 | Val Loss: 0.7131 | Train Acc: 100.00% | Val Acc: 82.40%
Epoch [20/20] Train Loss: 0.0003 | Val Loss: 0.7060 | Train Acc: 100.00% | Val Acc: 82.30%
Best results for resnet18:
Train Loss: 0.0004 | Val Loss: 0.7131 | Train Acc: 100.00% | Val Acc: 82.40%
Training Time: 122.17 seconds
```

```text
========== CONFIG ==========
NUM_CLASSES = 10
TRAINING_SAMPLE_PER_CLASS = 100
VALIDATION_SAMPLE_PER_CLASS = 100
BATCH_SIZE = 256
EPOCHS = 20
LR = 0.001
TRAINABLE_LAYERS = 2
EARLY_STOP_PATIENCE = 10
N_AUG = 4
DEVICE = cuda
============================
==============================
Training model: resnet18
==============================
Epoch [01/20] Train Loss: 0.5575 | Val Loss: 1.0303 | Train Acc: 81.97% | Val Acc: 74.00%
Epoch [02/20] Train Loss: 0.0700 | Val Loss: 0.9473 | Train Acc: 98.08% | Val Acc: 76.50%
Epoch [03/20] Train Loss: 0.0239 | Val Loss: 0.7925 | Train Acc: 99.55% | Val Acc: 78.50%
Epoch [04/20] Train Loss: 0.0103 | Val Loss: 0.7661 | Train Acc: 99.83% | Val Acc: 79.90%
Epoch [05/20] Train Loss: 0.0077 | Val Loss: 0.8783 | Train Acc: 99.83% | Val Acc: 77.70%
Epoch [06/20] Train Loss: 0.0073 | Val Loss: 0.8420 | Train Acc: 99.85% | Val Acc: 79.40%
Epoch [07/20] Train Loss: 0.0032 | Val Loss: 0.7737 | Train Acc: 99.95% | Val Acc: 80.30%
Epoch [08/20] Train Loss: 0.0021 | Val Loss: 0.7466 | Train Acc: 100.00% | Val Acc: 80.20%
Epoch [09/20] Train Loss: 0.0012 | Val Loss: 0.7240 | Train Acc: 100.00% | Val Acc: 81.30%
Epoch [10/20] Train Loss: 0.0007 | Val Loss: 0.7333 | Train Acc: 100.00% | Val Acc: 80.70%
Epoch [11/20] Train Loss: 0.0006 | Val Loss: 0.7475 | Train Acc: 100.00% | Val Acc: 81.40%
Epoch [12/20] Train Loss: 0.0004 | Val Loss: 0.7163 | Train Acc: 100.00% | Val Acc: 81.80%
Epoch [13/20] Train Loss: 0.0004 | Val Loss: 0.7068 | Train Acc: 100.00% | Val Acc: 82.60%
Epoch [14/20] Train Loss: 0.0003 | Val Loss: 0.7128 | Train Acc: 100.00% | Val Acc: 81.90%
Epoch [15/20] Train Loss: 0.0003 | Val Loss: 0.7149 | Train Acc: 100.00% | Val Acc: 81.90%
Epoch [16/20] Train Loss: 0.0003 | Val Loss: 0.7346 | Train Acc: 100.00% | Val Acc: 82.10%
Epoch [17/20] Train Loss: 0.0003 | Val Loss: 0.7218 | Train Acc: 100.00% | Val Acc: 82.30%
Epoch [18/20] Train Loss: 0.0003 | Val Loss: 0.7346 | Train Acc: 100.00% | Val Acc: 82.00%
Epoch [19/20] Train Loss: 0.0003 | Val Loss: 0.7266 | Train Acc: 100.00% | Val Acc: 82.20%
Epoch [20/20] Train Loss: 0.0002 | Val Loss: 0.7311 | Train Acc: 100.00% | Val Acc: 82.20%
Best results for resnet18:
Train Loss: 0.0004 | Val Loss: 0.7068 | Train Acc: 100.00% | Val Acc: 82.60%
Training Time: 144.81 seconds
```

```text
========== CONFIG ==========
NUM_CLASSES = 10
TRAINING_SAMPLE_PER_CLASS = 100
VALIDATION_SAMPLE_PER_CLASS = 100
BATCH_SIZE = 256
EPOCHS = 20
LR = 0.001
TRAINABLE_LAYERS = 2
EARLY_STOP_PATIENCE = 10
N_AUG = 5
DEVICE = cuda
============================
==============================
Training model: resnet18
==============================
Epoch [01/20] Train Loss: 0.4689 | Val Loss: 1.2141 | Train Acc: 84.92% | Val Acc: 72.50%
Epoch [02/20] Train Loss: 0.0470 | Val Loss: 0.9456 | Train Acc: 98.86% | Val Acc: 75.30%
Epoch [03/20] Train Loss: 0.0149 | Val Loss: 0.7469 | Train Acc: 99.76% | Val Acc: 79.80%
Epoch [04/20] Train Loss: 0.0066 | Val Loss: 0.7828 | Train Acc: 99.88% | Val Acc: 78.50%
Epoch [05/20] Train Loss: 0.0033 | Val Loss: 0.8726 | Train Acc: 99.94% | Val Acc: 78.20%
Epoch [06/20] Train Loss: 0.0016 | Val Loss: 0.7470 | Train Acc: 100.00% | Val Acc: 80.60%
Epoch [07/20] Train Loss: 0.0009 | Val Loss: 0.7269 | Train Acc: 100.00% | Val Acc: 81.00%
Epoch [08/20] Train Loss: 0.0010 | Val Loss: 0.8140 | Train Acc: 99.98% | Val Acc: 79.60%
Epoch [09/20] Train Loss: 0.0013 | Val Loss: 0.8110 | Train Acc: 99.98% | Val Acc: 79.70%
Epoch [10/20] Train Loss: 0.0007 | Val Loss: 0.7776 | Train Acc: 100.00% | Val Acc: 80.50%
Epoch [11/20] Train Loss: 0.0005 | Val Loss: 0.7703 | Train Acc: 100.00% | Val Acc: 80.90%
Epoch [12/20] Train Loss: 0.0003 | Val Loss: 0.7382 | Train Acc: 100.00% | Val Acc: 80.80%
Epoch [13/20] Train Loss: 0.0003 | Val Loss: 0.7256 | Train Acc: 100.00% | Val Acc: 81.80%
Epoch [14/20] Train Loss: 0.0002 | Val Loss: 0.7374 | Train Acc: 100.00% | Val Acc: 81.60%
Epoch [15/20] Train Loss: 0.0002 | Val Loss: 0.7457 | Train Acc: 100.00% | Val Acc: 81.00%
Epoch [16/20] Train Loss: 0.0002 | Val Loss: 0.7578 | Train Acc: 100.00% | Val Acc: 81.00%
Epoch [17/20] Train Loss: 0.0002 | Val Loss: 0.7834 | Train Acc: 100.00% | Val Acc: 81.10%
Epoch [18/20] Train Loss: 0.0002 | Val Loss: 0.7569 | Train Acc: 100.00% | Val Acc: 81.70%
Epoch [19/20] Train Loss: 0.0002 | Val Loss: 0.7576 | Train Acc: 100.00% | Val Acc: 80.90%
Epoch [20/20] Train Loss: 0.0002 | Val Loss: 0.7647 | Train Acc: 100.00% | Val Acc: 81.10%
Best results for resnet18:
Train Loss: 0.0003 | Val Loss: 0.7256 | Train Acc: 100.00% | Val Acc: 81.80%
Training Time: 166.25 seconds
```
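Each "Best results" line in these logs is the epoch with the highest validation accuracy, not the lowest validation loss: in the N_AUG = 1 run, for example, the lowest Val Loss is at epoch 15, but the reported best is epoch 17. A small sketch for pulling that out of a log in this format with a regular expression; `best_epoch` is a hypothetical helper, not part of the app:

```python
import re

# Matches one per-epoch line, e.g.
# "Epoch [17/20] Train Loss: 0.0044 | Val Loss: 0.7340 | Train Acc: 100.00% | Val Acc: 80.60%"
EPOCH_RE = re.compile(
    r"Epoch \[(\d+)/\d+\]\s+Train Loss:\s*([\d.]+)\s*\|\s*Val Loss:\s*([\d.]+)\s*\|\s*"
    r"Train Acc:\s*([\d.]+)%\s*\|\s*Val Acc:\s*([\d.]+)%"
)

def best_epoch(log_text):
    """Return (epoch, val_acc) for the highest validation accuracy, or None if no epochs match.

    Ties keep the earliest epoch.
    """
    best = None
    for m in EPOCH_RE.finditer(log_text):
        epoch, val_acc = int(m.group(1)), float(m.group(5))
        if best is None or val_acc > best[1]:
            best = (epoch, val_acc)
    return best

log_text = """Epoch [16/20] Train Loss: 0.0088 | Val Loss: 0.7160 | Train Acc: 99.80% | Val Acc: 80.50%
Epoch [17/20] Train Loss: 0.0044 | Val Loss: 0.7340 | Train Acc: 100.00% | Val Acc: 80.60%
Epoch [18/20] Train Loss: 0.0035 | Val Loss: 0.7265 | Train Acc: 100.00% | Val Acc: 80.10%"""
print(best_epoch(log_text))  # (17, 80.6)
```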

Judging by these results, the optimal value for N_AUG is 3 or 4. Next, let's try a more complex transform to see whether it improves validation accuracy (this run uses N_AUG = 5). Here's the code and log:

```python
train_transform = transforms.Compose([
    transforms.Resize(224),
    transforms.RandomResizedCrop(224, scale=(0.7, 1.0)),
    transforms.RandomHorizontalFlip(),
    transforms.ColorJitter(
        brightness=0.2,
        contrast=0.2,
        saturation=0.2,
        hue=0.05
    ),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406],
                         std=[0.229, 0.224, 0.225])
])
```

```text
========== CONFIG ==========
NUM_CLASSES = 10
TRAINING_SAMPLE_PER_CLASS = 100
VALIDATION_SAMPLE_PER_CLASS = 100
BATCH_SIZE = 256
EPOCHS = 20
LR = 0.001
TRAINABLE_LAYERS = 2
EARLY_STOP_PATIENCE = 10
N_AUG = 5
DEVICE = cuda
============================
==============================
Training model: resnet18
==============================
Epoch [01/20] Train Loss: 0.5717 | Val Loss: 1.2045 | Train Acc: 81.44% | Val Acc: 71.10%
Epoch [02/20] Train Loss: 0.1210 | Val Loss: 0.8994 | Train Acc: 96.42% | Val Acc: 75.70%
Epoch [03/20] Train Loss: 0.0603 | Val Loss: 0.9678 | Train Acc: 98.34% | Val Acc: 75.20%
Epoch [04/20] Train Loss: 0.0402 | Val Loss: 0.8390 | Train Acc: 98.90% | Val Acc: 78.10%
Epoch [05/20] Train Loss: 0.0242 | Val Loss: 0.7963 | Train Acc: 99.44% | Val Acc: 78.90%
Epoch [06/20] Train Loss: 0.0218 | Val Loss: 0.9132 | Train Acc: 99.40% | Val Acc: 77.00%
Epoch [07/20] Train Loss: 0.0134 | Val Loss: 0.8210 | Train Acc: 99.68% | Val Acc: 78.60%
Epoch [08/20] Train Loss: 0.0095 | Val Loss: 0.8353 | Train Acc: 99.86% | Val Acc: 78.40%
Epoch [09/20] Train Loss: 0.0094 | Val Loss: 0.9591 | Train Acc: 99.74% | Val Acc: 76.90%
Epoch [10/20] Train Loss: 0.0058 | Val Loss: 0.9056 | Train Acc: 99.84% | Val Acc: 79.90%
Epoch [11/20] Train Loss: 0.0114 | Val Loss: 1.0335 | Train Acc: 99.70% | Val Acc: 76.70%
Epoch [12/20] Train Loss: 0.0146 | Val Loss: 1.0974 | Train Acc: 99.50% | Val Acc: 76.20%
Epoch [13/20] Train Loss: 0.0155 | Val Loss: 1.0826 | Train Acc: 99.60% | Val Acc: 75.90%
Epoch [14/20] Train Loss: 0.0179 | Val Loss: 1.0649 | Train Acc: 99.48% | Val Acc: 73.90%
Epoch [15/20] Train Loss: 0.0233 | Val Loss: 1.0058 | Train Acc: 99.32% | Val Acc: 76.80%
Epoch [16/20] Train Loss: 0.0237 | Val Loss: 0.9900 | Train Acc: 99.20% | Val Acc: 75.40%
Epoch [17/20] Train Loss: 0.0301 | Val Loss: 1.0669 | Train Acc: 98.98% | Val Acc: 76.20%
Epoch [18/20] Train Loss: 0.0268 | Val Loss: 1.5781 | Train Acc: 99.14% | Val Acc: 72.00%
Epoch [19/20] Train Loss: 0.0173 | Val Loss: 1.1010 | Train Acc: 99.46% | Val Acc: 74.80%
Epoch [20/20] Train Loss: 0.0140 | Val Loss: 1.0322 | Train Acc: 99.60% | Val Acc: 76.00%
Early stopping triggered at epoch 20
Best results for resnet18:
Train Loss: 0.0058 | Val Loss: 0.9056 | Train Acc: 99.84% | Val Acc: 79.90%
Training Time: 379.45 seconds
```
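`RandomResizedCrop(224, scale=(0.7, 1.0))` picks a random sub-rectangle covering 70-100% of the image area, with a random aspect ratio (torchvision's default `ratio` range is 3/4 to 4/3), and resizes it to 224×224. The crop-box sampling can be sketched as follows. This is a simplified illustration modeled on the documented behavior, not torchvision's code: the function name is hypothetical, and the fallback when no box fits is simplified to the whole image.

```python
import math
import random

def sample_resized_crop_box(height, width, scale=(0.7, 1.0), ratio=(3 / 4, 4 / 3),
                            attempts=10, rng=random):
    """Return (top, left, h, w) of a random crop in the spirit of RandomResizedCrop.

    The area fraction is drawn uniformly from `scale` and the aspect ratio
    log-uniformly from `ratio`; if no box fits after `attempts` tries,
    fall back to the whole image (a simplification).
    """
    area = height * width
    log_lo, log_hi = math.log(ratio[0]), math.log(ratio[1])
    for _ in range(attempts):
        target_area = area * rng.uniform(*scale)
        aspect = math.exp(rng.uniform(log_lo, log_hi))
        w = int(round(math.sqrt(target_area * aspect)))
        h = int(round(math.sqrt(target_area / aspect)))
        if 0 < w <= width and 0 < h <= height:
            top = rng.randint(0, height - h)    # randint includes both endpoints
            left = rng.randint(0, width - w)
            return top, left, h, w
    return 0, 0, height, width

random.seed(0)
print(sample_resized_crop_box(224, 224))
```

Because the selected box is then resized back to 224×224, objects are rescaled and their aspect ratio changes, a larger perturbation than the ±8-pixel shifts of `RandomCrop(224, padding=8)`. With `scale=(0.6, 1.0)`, as in the next pipeline, the crop can cover as little as 60% of the image.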

Next, an even stronger transform (wider crop-scale range, stronger color jitter, and random grayscale), run with N_AUG = 3. Here's the code and log:

```python
train_transform = transforms.Compose([
    transforms.Resize(224),
    transforms.RandomResizedCrop(224, scale=(0.6, 1.0)),  # crop 60-100% of the area, resize back to 224x224 (zoom in)
    transforms.RandomHorizontalFlip(),
    transforms.ColorJitter(brightness=0.4, contrast=0.4, saturation=0.4, hue=0.1),
    transforms.RandomGrayscale(p=0.1),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406],
                         std=[0.229, 0.224, 0.225])
])
```

```text
========== CONFIG ==========
NUM_CLASSES = 10
TRAINING_SAMPLE_PER_CLASS = 100
VALIDATION_SAMPLE_PER_CLASS = 100
BATCH_SIZE = 256
EPOCHS = 20
LR = 0.001
TRAINABLE_LAYERS = 2
EARLY_STOP_PATIENCE = 10
N_AUG = 3
DEVICE = cuda
============================
==============================
Training model: resnet18
==============================
Epoch [01/20] Train Loss: 1.0339 | Val Loss: 2.3851 | Train Acc: 65.23% | Val Acc: 60.50%
Epoch [02/20] Train Loss: 0.4568 | Val Loss: 0.9064 | Train Acc: 84.13% | Val Acc: 73.70%
Epoch [03/20] Train Loss: 0.3050 | Val Loss: 0.9382 | Train Acc: 90.27% | Val Acc: 74.40%
Epoch [04/20] Train Loss: 0.2116 | Val Loss: 0.7938 | Train Acc: 92.57% | Val Acc: 75.20%
Epoch [05/20] Train Loss: 0.1569 | Val Loss: 0.8974 | Train Acc: 95.10% | Val Acc: 74.20%
Epoch [06/20] Train Loss: 0.1196 | Val Loss: 0.7643 | Train Acc: 96.20% | Val Acc: 77.60%
Epoch [07/20] Train Loss: 0.1148 | Val Loss: 0.7781 | Train Acc: 96.23% | Val Acc: 78.60%
Epoch [08/20] Train Loss: 0.0841 | Val Loss: 0.7664 | Train Acc: 97.23% | Val Acc: 79.80%
Epoch [09/20] Train Loss: 0.0722 | Val Loss: 0.8293 | Train Acc: 97.83% | Val Acc: 76.70%
Epoch [10/20] Train Loss: 0.0686 | Val Loss: 0.8533 | Train Acc: 97.77% | Val Acc: 79.10%
Epoch [11/20] Train Loss: 0.0717 | Val Loss: 0.8412 | Train Acc: 97.93% | Val Acc: 78.60%
Epoch [12/20] Train Loss: 0.0684 | Val Loss: 1.0308 | Train Acc: 98.00% | Val Acc: 74.70%
Epoch [13/20] Train Loss: 0.0532 | Val Loss: 0.8491 | Train Acc: 98.27% | Val Acc: 78.00%
Epoch [14/20] Train Loss: 0.0564 | Val Loss: 0.8526 | Train Acc: 98.37% | Val Acc: 76.70%
Epoch [15/20] Train Loss: 0.0539 | Val Loss: 0.8671 | Train Acc: 98.47% | Val Acc: 77.70%
Epoch [16/20] Train Loss: 0.0532 | Val Loss: 0.9870 | Train Acc: 98.23% | Val Acc: 77.40%
Epoch [17/20] Train Loss: 0.0577 | Val Loss: 0.9720 | Train Acc: 98.50% | Val Acc: 77.90%
Epoch [18/20] Train Loss: 0.0682 | Val Loss: 1.1606 | Train Acc: 97.90% | Val Acc: 73.90%
Early stopping triggered at epoch 18
Best results for resnet18:
Train Loss: 0.0841 | Val Loss: 0.7664 | Train Acc: 97.23% | Val Acc: 79.80%
Training Time: 254.64 seconds
```
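`ColorJitter` draws its brightness, contrast and saturation factors uniformly from [max(0, 1 - s), 1 + s] and its hue shift from [-h, h], so raising s from 0.2 to 0.4 doubles each range (from 0.8-1.2 to 0.6-1.4), and the hue range grows from ±0.05 to ±0.1. The NumPy sketch below shows those ranges, a brightness adjustment, and a random grayscale step. It is an illustration with hypothetical function names, not torchvision's code; it assumes float H×W×3 images in [0, 1] and uses the ITU-R BT.601 luma weights (0.299, 0.587, 0.114).

```python
import numpy as np

def jitter_range(strength):
    """Interval from which a brightness/contrast/saturation factor is sampled."""
    return (max(0.0, 1.0 - strength), 1.0 + strength)

def adjust_brightness(img, factor):
    """Scale pixel values by `factor` and clip back to [0, 1]."""
    return np.clip(img * factor, 0.0, 1.0)

def random_grayscale(img, rng, p=0.1):
    """With probability p, replace RGB by luma, kept as 3 identical channels."""
    if rng.random() >= p:
        return img
    luma = img @ np.array([0.299, 0.587, 0.114])   # (H, W, 3) -> (H, W)
    return np.repeat(luma[..., None], 3, axis=-1)

for s in (0.2, 0.4):
    lo, hi = jitter_range(s)
    print(f"strength {s}: factors in [{lo:.1f}, {hi:.1f}]")
# strength 0.2: factors in [0.8, 1.2]
# strength 0.4: factors in [0.6, 1.4]
```

At strength 0.4 a bright image can be pushed into clipping (a factor of 1.4 saturates every pixel above about 0.71), and with `RandomGrayscale(p=0.1)` about one training sample in ten loses its color cues entirely, which is one way to see why this pipeline moves inputs further from what the pretrained model expects.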
